My project, the ‘Sound of Social Networking’, has been an interesting exploration into generative sound and communication. The project was originally aimed at meeting the following five keywords:

• Generative Art

• Digital Performance

• Dadaist poetry

• Social Networking

• Sound/Music Production

In terms of generative art, I feel there is a close link, as the audio and visuals are generated from the text provided by Facebook users. This also makes the process side of my project quite strong. My project can also be considered a digital performance: it updates live, so each run is a performance, and the means by which it is created are entirely digital, particularly the generative element. I have also met the social networking and sound production keywords, as these are at the centre of how my project works. The link with Dadaist poetry, however, is not as strong. The process within my project could be considered part of this, but there are few instructions or randomised elements in the project. The final outcome of my project, the audio in particular, has a Dadaist quality due to its rather abstract nature, but this does not necessarily make it Dadaist.

There are a number of elements of my project which could be improved in the future. Firstly, the audio produced is very monotonous, with every note the same length and volume. However, the RSS feed provides other information that could be used to make the output more musical. There is also the question of access, both to data and to the application itself. The application should be able to read data regardless of the feed format, so that it could be used with multiple feeds rather than just the one I have developed. This would make it more functional and more open to interpretation than it is at the moment. It would also be good if the application were connected to its data source online, so that contributors could see how what they write affects the audio produced.

In terms of the process of communication I originally planned, I feel this project fell a little short. Conversation was not developed or emulated well, but other developments were discovered instead. My project can instantly represent the most-used word length at any given time, and it displays communication musically, as a kind of written score. It also demonstrated an interesting openness of thought, and showed how this can lead to themes, such as emotion, emerging in the textual content gathered.

Overall I am happy with the project that I produced and have, through development, discovered a number of interesting features and possibilities of my project that I did not originally plan for, but that in my opinion make my project a success.

Monday, 26 April 2010

IDAT210 - Development Possibility: Webpage Music

After creating the finished piece for my project, it occurred to me that there is another step the project could take which would re-align its general concept but would also be a good and interesting development. This idea is centred on 'webpage music'. Because of the way my project works, the same system can be used for any RSS feed URL. It would therefore be easy enough to make the project dynamic, letting users provide a URL which is then turned into audio. In this way music could be created for any RSS feed the user provides. Generalising to any RSS feed would shift the focus of the project to turning any web feed into sound. The project could then be classed as a webpage music generator, developing the sound of the internet rather than simply of a social networking page.

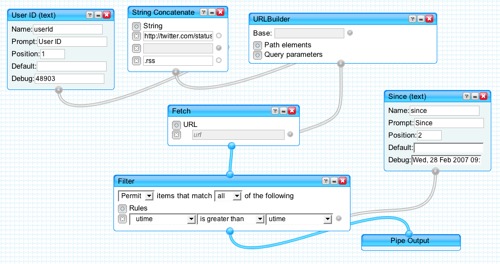

As this development should not be too difficult, I decided to try creating this version of my project, as shown below:

However, on creating this I came across a problem: the system was reading the titles of posts rather than their content. This is fine for the Facebook posts, as the title and content of each post are the same, but in most RSS/Atom feeds they differ, as with these blog posts. Used this way, the system would only give users a musical representation of the titles of items, not their content. I also tried the system with a standard RSS feed from Microsoft, which did not seem to work, as the application is built around the Atom format. For this version of my project to work, I would therefore have to handle both formats and extract the main content without any extraneous HTML. I decided that although this is a good idea for a future development, it would be best to keep my application in its current 'sound of social networking' state.
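Handling both formats mainly means checking the root element before reading anything. The sketch below shows one way to do this in Python rather than AS3 (the project's own code is not shown in these posts). It reads `<content>` or `<summary>` from Atom entries and `<description>` from RSS 2.0 items, falls back to the title, and strips HTML tags with a simple regular expression. The function names are hypothetical. The regex approach is only adequate for simple markup, and Atom `type="xhtml"` content, which arrives as child elements rather than text, is not handled.

```python
import re
import xml.etree.ElementTree as ET
from html import unescape

ATOM_NS = "{http://www.w3.org/2005/Atom}"


def strip_html(fragment):
    """Drop HTML tags, decode entities such as &amp; and collapse whitespace."""
    text = re.sub(r"<[^>]+>", " ", fragment)
    return " ".join(unescape(text).split())


def first_text(parent, tags):
    """Return the cleaned text of the first listed child element that has any."""
    for tag in tags:
        node = parent.find(tag)
        if node is not None and node.text and node.text.strip():
            return strip_html(node.text)
    return None


def extract_post_texts(feed_xml):
    """Main text of each entry in an Atom feed or each item in an RSS 2.0 feed."""
    root = ET.fromstring(feed_xml)
    if root.tag == ATOM_NS + "feed":
        entries = root.iter(ATOM_NS + "entry")
        # Prefer full content, then a summary, and only then the title.
        tags = [ATOM_NS + "content", ATOM_NS + "summary", ATOM_NS + "title"]
    elif root.tag == "rss":
        entries = root.iter("item")
        tags = ["description", "title"]
    else:
        raise ValueError("unrecognised feed format: " + root.tag)
    texts = [first_text(entry, tags) for entry in entries]
    return [t for t in texts if t]
```

Checking the root tag first keeps the two formats cleanly separated. Preferring content over title addresses exactly the problem described above.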

IDAT210 - The Finished Project

Once I had formatted and completed my project application, all that was left was to launch the Facebook page to the public and get people to carry out the text-creation process from which my application runs. To do this I added a post to the page explaining the limitations on how the page could be used, as mentioned in the Posting with Facebook RSS post. I then invited a number of people to the page and added them as admins whenever they joined. To ensure there were no mistakes in the posts made, I locked the wall so that only those I had added as admins could write on it. This ensured there were no extraneous posts that the feed would fail to pick up.

Getting the public involved means my project application is now self-perpetuating, creating audio from other people's participation in the project rather than mine alone. To find out what the sound of social networking is like at the moment, download the zip folder from here:

www.veat.eclipse.co.uk/projects/FBsoundproj.zip

Once the file has finished downloading, extract the folder from the zip file and run either the exe file or the app file, depending on whether you are using a PC or a Mac. Accept the security warnings to run the file and enjoy! If you wish to get involved in the project, log in to Facebook, search for the Uni Project Help page and become a Fan. I'll endeavour to add you as an admin so you can contribute to the wall and see how it changes the sound of social networking.

Sunday, 25 April 2010

IDAT210 - Textbox fix

As mentioned earlier, I was unsure how to deal with the text in my application. I was not sure whether it was necessary, and there was too much text for the space available. I have now come up with a simple fix. Firstly, I decided to keep the text in the app, as it gives people a chance to compare the textual data with the sound and visuals produced from it. That left the problem of space for the posts. I decided the best solution was the most obvious one: add a scroll bar to the text box, so that viewers can scroll up and down through the posts being used without having to refer to the Facebook page. Looking into scrolling text boxes, I found that I could use Flash's UIScrollBar component for the task. This had to be set up in the AS3 code after the text had been added, so that the scroll bar matched the length of the text. The following is how the application now looks:

Hopefully, once the project is properly launched on Facebook, there will be much more, and more varied, text than this. That will give the scroll bar more use and produce a longer and more varied audio and visual piece.

IDAT210 - Public Access

To give my Facebook page members some understanding of what they were contributing, I planned to upload the application to the internet, either as a Facebook app or as a page on my website that members could navigate to. This way, after posting, users could see how their contribution was used and how it changed the 'sound of social networking'. However, on beginning to implement this I came across one major flaw. Flash security settings are awkward. On a local machine, a SWF needs specific permission from Flash Player to access any address outside that machine. Similarly, when posted online, a SWF cannot load data from any domain other than the server it is running from, unless that domain publishes a cross-domain policy file allowing it. This meant that to post my project application online, I would need to fetch the remote data by some means other than Flash. After some research I found that one way to do this is a small PHP script that pulls in the data, which the SWF can then load from its own server. I tried to implement this solution, only to find that my web space does not support PHP, so I could not host it anywhere it would work. This left me with one other option. The local security restriction applies to SWF files but not to applications exported as a Windows exe or a Mac app. I therefore exported the project in these formats, which can be uploaded to my web space and downloaded by others to run locally on their computers. This is a slightly long-winded route for the Facebook page members and others, but it does allow users to view the project. That meets my ultimate objective, if a little awkwardly.
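The proxy idea is simple: a script on the same server as the SWF fetches the remote feed and re-serves it, so Flash only ever loads data from its own domain. The author's host did not support PHP. Purely as an illustration, here is the same idea as a minimal Python WSGI app using only the standard library. `FEED_URL` is a placeholder, since the post does not give the real feed address, and `make_feed_proxy` is a hypothetical name.

```python
from urllib.request import urlopen

# Placeholder: the real Facebook page feed URL is not given in the post.
FEED_URL = "http://example.com/facebook-page-feed.xml"


def default_fetch(url):
    """Download the remote feed server-side, where Flash's sandbox does not apply."""
    with urlopen(url, timeout=10) as response:
        return response.read()


def make_feed_proxy(fetch=default_fetch, feed_url=FEED_URL):
    """Build a WSGI app that re-serves one fixed feed from this server's own domain."""

    def app(environ, start_response):
        try:
            body = fetch(feed_url)
        except OSError:  # URLError, HTTPError and timeouts are all OSError subclasses
            start_response("502 Bad Gateway", [("Content-Type", "text/plain")])
            return [b"feed unavailable"]
        start_response("200 OK", [
            ("Content-Type", "application/xml"),
            ("Content-Length", str(len(body))),
        ])
        return [body]

    return app
```

The feed address is fixed rather than taken from the request, so the script cannot be abused as an open proxy. To try it locally, pass `make_feed_proxy()` to `wsgiref.simple_server.make_server("", 8000, app)` and call `serve_forever()` on the result. The SWF would then load the feed from that server instead of from Facebook.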

IDAT210 - Posting with Facebook RSS

Once I had a fully working model of my project, the final stage was to check its compatibility with the Facebook page, in particular fan posts and comments. To test this, I used a second account that was not an admin of the page to add posts and comments, and then ran the application. Unfortunately, the posts and comments did not show up in the feed, and so did not appear in the application either. However, if I posted items myself (as the admin of the page), the RSS feed picked the changes up. Comments, however, did not show up in the feed or in the application even when posted by an admin. This meant there was only one way to get the feed to pick up the necessary data: make all users admins, and have them post only directly onto the wall. This was, to all intents and purposes, a major setback for how easily data could be added to the Facebook wall for my application. As there was a workaround, however, I decided to stick with what I had created so far and use it. This meant inviting people to the page, making them all admins, and telling them to post only onto the wall. Admittedly this is more information than I ever intended to give users of the page, but it was necessary for the project to work as a whole.

IDAT210 - Project tweaks

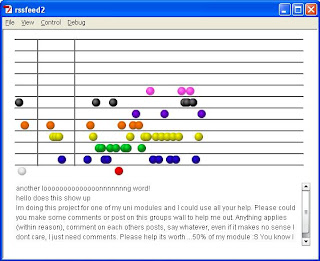

Once the body of the project had been devised and created, the next step was to make sure every eventuality was covered in how the project functions. In particular this covered two likely problems: words longer than 12 characters, and enough posts for the visualisation to run past the stage width. I devised a simple solution for each. For the character issue, I had already decided that only 12 notes would be included. I could therefore either ignore words over this length, or continue to represent them visually but leave them out of the audio. I decided the best method was to keep such words in the visuals, representing any word above 12 characters with a separate circle. This keeps the visuals as accurate to the data as possible, so people won't get too confused. This is shown below:
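The rule for long words can be summarised as follows: every word gets a circle, but only words of up to 12 characters get a note. Here is a small Python sketch of that split (the original was written in AS3, which isn't shown). `plan_words` and the `"overflow"` marker are hypothetical names.

```python
MAX_NOTE_LENGTH = 12  # one recorded piano note per word length from 1 to 12


def plan_words(word_lengths):
    """Split word lengths (assumed to be >= 1) into an audio and a visual track.

    Words of up to 12 characters appear in both tracks; longer words are kept
    in the visuals as a separate "overflow" circle but left out of the audio.
    """
    audio, visuals = [], []
    for length in word_lengths:
        if length <= MAX_NOTE_LENGTH:
            audio.append(length)
            visuals.append(length)
        else:
            visuals.append("overflow")
    return audio, visuals
```

Keeping two tracks lets the visuals stay faithful to the text while the audio is limited to the recorded notes.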

For the other issue, more circles than fit across the stage, I decided to keep with the notation theme. The visualisation simply continues on a new blank page, as if turning to the next page of the score. This is shown below:
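Turning to a new page amounts to dividing the circles into fixed-size pages. Below is a Python sketch that assumes each circle occupies a fixed horizontal slot of `spacing` pixels and ignores the extra space taken by the vertical lines between comments. `paginate` and its parameters are hypothetical.

```python
def paginate(num_circles, stage_width, spacing, margin=0):
    """Give each circle a (page, x) position, starting a new page when one is full."""
    usable = stage_width - 2 * margin
    per_page = max(1, usable // spacing)  # always place at least one circle per page
    positions = []
    for i in range(num_circles):
        page, slot = divmod(i, per_page)  # which page, and which slot on that page
        positions.append((page, margin + slot * spacing))
    return positions
```

For example, a 550-pixel-wide stage (Flash's default document width) with 50-pixel spacing and no margin fits 11 circles on each page.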

This ensures that the viewer can quickly and easily see the visualisation of the whole audio track, rather than just part of it.

Another aspect I added to my project was short breaks in the audio between comments. Originally my audio was one long, continuous string of sound. However, as my project is based around the idea of communication, I felt I should somehow demonstrate this through the audio that is created. I therefore added breaks between comments, like rests in a piece of music. These emphasise the different comments and give a sense of communication between the different sections of music, almost as if they are responding to each other. This mimics the communication that takes place through the comments themselves. Rests are also a standard concept in music composition, so they strengthen the link between the project and music.
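Adding rests comes down to inserting a gap in the playback timeline whenever a new comment starts. The Python sketch below assumes fixed durations; `NOTE_SECONDS` and `REST_SECONDS` are illustrative values, since the post does not give the real ones. The hypothetical `schedule` function returns a start time for each note.

```python
NOTE_SECONDS = 0.5  # assumed: every note has the same length
REST_SECONDS = 1.0  # assumed: length of the rest between comments


def schedule(comments):
    """Turn comments (each a list of notes) into (start_time, note) pairs,
    leaving a rest before every comment except the first."""
    events = []
    t = 0.0
    for index, notes in enumerate(comments):
        if index > 0:
            t += REST_SECONDS  # the rest separating this comment from the last
        for note in notes:
            events.append((t, note))
            t += NOTE_SECONDS
    return events
```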

IDAT210 - Audio Visualisation

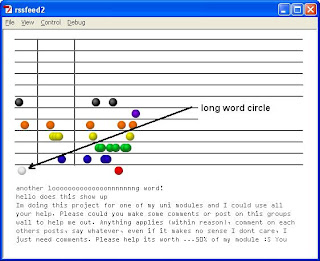

As I created my audio in Flash, I also felt it was important to create a visualisation for the piece, as this would give viewers a better understanding of the audio and of the project as a whole. I began by thinking about what kind of visual elements I could use. I decided the best approach would be to attach one visual element to each note, so that when the note plays, the element also appears on screen. I didn't want the visuals to be too confusing, so I experimented with circles and lines to see what kind of generated visuals I could create. In the end I decided the best method was simply to give each note a different coloured circle and add these to the screen. Having a different colour for each note makes the circles identifiable. It also lets viewers get a visual sense of which word lengths are used most, from which colour appears most on screen.
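Giving each note its own colour is a lookup table indexed by word length. The 'which colour shows most' reading can also be computed directly by counting. Here is a Python sketch. The palette is hypothetical, written as 0xRRGGBB integers like AS3 colour values, since the post does not list the actual colours.

```python
from collections import Counter

# Hypothetical palette: one colour per word length 1-12.
NOTE_COLOURS = [
    0xE6194B, 0x3CB44B, 0xFFE119, 0x4363D8, 0xF58231, 0x911EB4,
    0x46F0F0, 0xF032E6, 0xBCF60C, 0xFABEBE, 0x008080, 0xE6BEFF,
]


def colour_for_length(length):
    """Circle colour for a word of 1-12 characters."""
    if not 1 <= length <= len(NOTE_COLOURS):
        raise ValueError("no colour for word length %d" % length)
    return NOTE_COLOURS[length - 1]


def most_common_length(word_lengths):
    """The playable word length (and so colour) that appears most often, or None."""
    counts = Counter(n for n in word_lengths if 1 <= n <= len(NOTE_COLOURS))
    if not counts:
        return None
    return counts.most_common(1)[0][0]
```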

Once these circles had been integrated the next challenge was in how to organise the circles on screen. I began investigating this by placing the circles randomly on stage as shown below:

However, I felt this did not give the viewer much understanding of the information, because of its random nature. It also prevented the viewer from distinguishing between the comments represented. I then tried a more structured approach, arranging the visuals in lines, with a new line for each post. This is shown below:

This was an improvement on the randomised version, as it allowed the viewer to compare the different posts by their colours and to identify which parts of the visualisation apply to which parts of the audio. However, I still felt that more could be gained from the visualisation, and that it could be better fitted to the context of the project. I therefore continued to experiment with the visuals until I arrived at the version below:

I like this design as it displays the circles in a very musical way. The format particularly reminds me of guitar tab, but it is also similar to a standard score. I therefore think it is a good design for the visualisation, as it provides the viewer with a notation of the text as well as the audio. This is why I added the horizontal lines: both to separate the individual circles better and to strengthen the bond between the project and musical notation. To follow this theme through, lower notes are placed at the bottom of the visualisation and higher notes further up, just like a standard score. A vertical line marks where each comment starts and finishes, in both the audio and the visualisation. Like a bar line in a standard score, it reinforces the project's musical undertones. As the notes are now distinguished by their vertical position, it is questionable whether to keep the colours, since colour is not standard in musical notation. However, I have decided to keep them for three reasons. Firstly, the colours give a bigger difference between the rows, so the viewer can more easily tell which notes belong to which row. Secondly, they let the viewer judge which notes are used most often, and so which word length is most common. Finally, the colours improve the aesthetic of the visualisation, and so for these reasons I have kept them in the project.
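The score layout combines two rules from the description above. Pitch sets the vertical position, with lower notes lower on screen, and a vertical line marks the start of each new comment. Below is a Python sketch with hypothetical pixel values and function names. It takes note indices from 0 (lowest) to 11 (highest). Because screen y grows downward, higher notes get smaller y values.

```python
def note_y(note_index, staff_bottom, line_gap):
    """Y coordinate for a note: index 0 sits on the bottom line, each higher
    note one line further up (screen y grows downward)."""
    return staff_bottom - note_index * line_gap


def layout_score(comments, staff_bottom=360, line_gap=25, note_gap=30):
    """Place every note of every comment; return the circles as (x, y, note)
    plus the x positions of the vertical lines between comments."""
    circles, bar_lines = [], []
    x = note_gap
    for index, notes in enumerate(comments):
        if index > 0:
            bar_lines.append(x)  # vertical line marking the start of a new comment
            x += note_gap
        for note in notes:
            circles.append((x, note_y(note, staff_bottom, line_gap), note))
            x += note_gap
    return circles, bar_lines
```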

The only other element of this design that needs to be considered is the text showing the posts that the audio and visuals are referencing. I have considered including this, as it gives the viewer something to compare the other elements with and a better sense of what the project is achieving. However, this text will very quickly fill up with posts from people commenting on the Facebook page. It may then be difficult to read and investigate in relation to the audio and visuals. This therefore needs to be looked at further before my project can be fully completed.

IDAT210 - Audio and words

Once I had the basic parsing working, I was able to begin coding the sound elements that turn the posts into sound. The RSS library I found already listed each individual post on the page, so my task was to take these and develop the code that would build my project. The first thing I did was write the code that gives the length of each word in a post, from which the sound is produced. By finding the space characters in a string of text, I was able to count how many characters came before each one and so calculate the length of each word. Once this was in place, I needed some sounds to apply to the word lengths.
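Counting characters between spaces, as described, can be sketched in Python as follows. This is a re-expression of the idea, not the original AS3, and `word_lengths` is a hypothetical name. Punctuation attached to a word counts towards its length, and runs of spaces do not create empty words.

```python
def word_lengths(post):
    """Lengths of the space-separated words in a post."""
    lengths = []
    current = 0
    for ch in post:
        if ch == " ":
            if current:  # ignore empty "words" from repeated spaces
                lengths.append(current)
            current = 0
        else:
            current += 1
    if current:  # the last word has no trailing space
        lengths.append(current)
    return lengths
```

This gives the same result as `[len(w) for w in post.split(" ") if w]`. Only spaces separate words here, as in the description. Calling `post.split()` with no argument would also split on tabs and line breaks, which may suit multi-line posts better.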

For the audio produced by the project to be in some way understandable, I felt the best sounds to use would be piano notes, each note representing a different word length. I therefore recorded a set of 12 piano notes from F below middle C to C above middle C, not including any black keys. I left out the black keys because audio generated from text is likely to produce many clashes between notes, and with black keys these would be especially noticeable. Leaving them out keeps the generated audio as musical as possible, keeping whatever tune is produced within the key of C. I recorded only 12 notes in total, as I felt it would be unlikely for many words to be longer than this. Most words cluster around 4 or 5 characters, especially as text speak is often used on platforms like Facebook. Any words in the text longer than 12 characters are therefore not played in the audio.
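The note range described above can be written out as a lookup table. This Python sketch uses scientific pitch notation, where middle C is C4, so F below middle C is F3 and C above middle C is C5. The post does not state the direction of the mapping, so the sketch assumes that shorter words map to lower notes. `note_for_word` is a hypothetical name.

```python
# The 12 white keys from F below middle C (F3) to C above middle C (C5).
NOTES = ["F3", "G3", "A3", "B3", "C4", "D4", "E4",
         "F4", "G4", "A4", "B4", "C5"]


def note_for_word(length):
    """Note for a word of the given length, or None if it is too long to play."""
    if 1 <= length <= len(NOTES):
        return NOTES[length - 1]  # assumed: length 1 -> lowest note
    return None
```

Counting the table confirms the range: the white keys from F3 to C5 make exactly 12 notes.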

Once I had my sound files, I began coding the link between word lengths and the notes played. Each note is played one after the other, and I chose not to apply any other transformations so as not to make the audio too complicated.
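Playing notes 'one after the other' means starting each note only when the previous one has finished. In AS3, a common way to do this is to listen for `Event.SOUND_COMPLETE` on the `SoundChannel` returned by `Sound.play()`, although the post does not say which approach was used. The pattern is sketched here in Python. A hypothetical `NoteSequencer` class takes a caller-supplied `play(note, on_complete)` function that stands in for real sound playback.

```python
from collections import deque


class NoteSequencer:
    """Play notes strictly in order: each note starts only when the previous
    one reports, through its completion callback, that it has finished."""

    def __init__(self, notes, play):
        self._queue = deque(notes)
        self._play = play  # play(note, on_complete): starts a note, calls on_complete when done

    def start(self):
        self._play_next()

    def _play_next(self):
        if self._queue:
            note = self._queue.popleft()
            self._play(note, self._play_next)  # the next note waits for this callback
```

Chaining on completion, rather than starting notes on a timer, keeps notes from overlapping even if the sample files differ slightly in length.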

IDAT210 - Facebook, Flash and RSS

The first stage in creating my project is to set up the Facebook location from which the textual data will be retrieved. The easiest way to get information from this location is through RSS. The feed is an XML document that is updated as new content is posted, and my application can read it. This may seem a simple task, but it took some development to find the right elements to make it happen. Originally I planned to make a Facebook group which I could invite people to and which they could then add comments to. Once I had created the group, however, I found that Facebook does not provide a built-in RSS feed for groups. I therefore searched for ways to get a feed from the page and came across two different tools: Yahoo Pipes and page2rss.com.

Yahoo Pipes is a small application builder which allows people to create all sorts of programs quickly and easily. One of the applications I found there was a tool specifically for making RSS feeds from Facebook, which took the ID of a Facebook group and created an RSS feed for that group. I tried to make this work, but it did not seem to work well for my group and did not give me the feed I wanted. I then continued searching for a solution and found page2rss.com.

Page2rss.com is a service similar to the Pipes app, but it takes any page URL and turns it into an RSS feed which checks for updates. I entered the URL of the Facebook group, hoping this would work. However, on adding information to the group wall, I found that the feed did not update often enough. When it did update, it included a lot of extraneous information from page2rss. As these two methods were unsuccessful for Facebook groups, I began to consider other areas of Facebook I could use for my project. After searching through different sections of Facebook, I discovered that Facebook Pages had built-in RSS feeds. I therefore created a Facebook page for my project, with its own RSS feed, and got the URL of this feed so that the data could be parsed into my Flash application.

The next hurdle was getting this feed properly into Flash. To begin with, I tried a standard XML parse to bring the RSS data into the Flash environment, as RSS is an XML format. However, this only partly worked, as the important information seemed to be missing from the data, so I began researching how to get RSS into Flash with AS3. After looking at a number of code examples online, I found that in AS2 it seemed you could import RSS much like XML, but that AS3 might be more difficult. I then came across an RSS/Atom parsing library for Flash called NewsATOM_cs3, from http://lucamezzalira.com/2009/02/07/parsing-rss-10-20-and-atom-feeds-with-actionscript-3-and-flash-cs4/. This open-source library was developed from as3syndicationlib and made compatible with Flash by Luca Mezzalira, and it allows users to parse RSS 1.0, RSS 2.0 and Atom feeds. I downloaded it and used it as the base of my project to handle RSS parsing for the Facebook page.
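The post does not say exactly what went missing. One common cause is XML namespaces, offered here as a guess rather than a diagnosis. Atom feeds declare a default namespace, so a lookup by a bare element name such as `entry` matches nothing unless the parser is told about the namespace. AS3's E4X behaves the same way. The Python sketch below (standard library only, with hypothetical function names) shows the difference on a tiny sample feed.

```python
import xml.etree.ElementTree as ET

NS = {"atom": "http://www.w3.org/2005/Atom"}

SAMPLE_FEED = """<feed xmlns="http://www.w3.org/2005/Atom">
  <title>Example page</title>
  <entry><title>First post</title></entry>
  <entry><title>Second post</title></entry>
</feed>"""


def naive_titles(feed_xml):
    """Looks for un-namespaced <entry> elements, so finds nothing in an Atom feed."""
    root = ET.fromstring(feed_xml)
    return [e.findtext("title") for e in root.findall("entry")]


def atom_titles(feed_xml):
    """Namespace-aware lookup: matches <entry> and <title> in the Atom namespace."""
    root = ET.fromstring(feed_xml)
    return [e.findtext("atom:title", namespaces=NS)
            for e in root.findall("atom:entry", namespaces=NS)]
```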

Saturday, 24 April 2010

IDAT210 - The project

After rethinking my strategy, I decided that the project would be similar to the original idea, but more meaningful. The sound created through the project should update live to demonstrate a kind of performance, and will be generated from comments that people make on a Facebook page. The people who provide the data will not be told specifically what the project is about or what to write. The hope is that they will decide for themselves and create some kind of conversation out of it. By allowing this to happen, the project will comment on the ways in which social networking can create good communication out of a lack of communication.

The audio will provide the 'sound of social networking', based on the length of the words people write. When posts are added, the music will change accordingly, providing the live updating element. The project will be built in Flash, and the information will be retrieved using RSS.

Wednesday, 21 April 2010

Stonehouse - An Evaluation

The Transforming Stonehouse project has been a year-long investigation into the area of Stonehouse: its character, its people and how it can be improved. The work we carried out over this year breaks down into three basic sections: The Site, The Hertzian Space, and the Final Arduino Project. Throughout these sections, the ideas my group and I had about transforming Stonehouse were investigated and developed. This eventually led us to an implementable project that could potentially transform Stonehouse for the better. Throughout the year our ideas were continually developed while we kept to one particular focus. At the beginning of the year we looked at Stonehouse in relation to light. We then developed this further during our long exposure investigation into the concept of communication using light. This then carried over into our Arduino project, which led us to the project we have created.

I am quite happy with what we have managed to achieve with the Arduino. Although we have not yet been able to implement it properly, this was to be expected, so achieving a working model was good. There are, however, a few elements of the final project that could be improved or developed further. Firstly, the concept of communication through light was good, but I feel our final project strayed slightly from it, as the use of text meant we were communicating regardless of the light. The only part light played in our project was the projection itself, so the involvement of light in communication could have been developed further. As well as this, the information we used could have been better researched in terms of people's opinions and the words they felt would be opposite to the feel of Stonehouse. The words we used were chosen based on our group's personal opinion of the area and other opinions we had gathered over the year. Although these opinions seemed to be the general consensus, we did not find out the opinions of the general public in the area. We may therefore have misjudged how people feel about Stonehouse.

In terms of further development, one feature that could be added to our projection is some form of interactivity. People tend to engage with interactive projections, where parts of the projection respond to their movements, much more than with non-interactive ones. To get our message across better and to transform Stonehouse further, the projection could be developed to react to people's movements in front of it.

Ultimately, our final project gives a clear message and works effectively, incorporating Arduino technology and the ideas we have developed throughout the year. I am happy with the project we have created and feel it is a successful development for our site in Stonehouse.

Tuesday, 13 April 2010

Stonehouse - The projection finalisation demo

The final stage of our Transforming Stonehouse module was to create a demo of our project idea, based on our work over the year, showing what we planned to do and how we would do it if we were able to install it properly at our site.

Our idea was to connect an Arduino to a light sensor to detect whether a pedestrian crossing button had been pressed. When a pedestrian presses the button on a crossing, a light comes on in the console, usually showing either 'WAIT' or a pedestrian symbol. A light sensor lets us tell when this light has been switched on, and therefore when the button has been pressed. The data from the light sensor would then both trigger and control a projection. The projection would run from a Flash application: the data from the Arduino would be sent to the application and used to modify and control it. If the data showed that the light was on, a selection of words would be projected, linking to our idea of communication through light. The number of words shown would be based on the length of time since the light was last switched on. The words were chosen to counteract current opinions of the Stonehouse area by being more positive, encouraging viewers to think more positively and so improving how people feel about Stonehouse. Below is a video demonstrating how the system works, using the Arduino with the light sensor, a computer and a projector in a classroom, as we have not yet been able to install it on site due to its requirements:

Transforming Stonehouse Demo from Rebecca Veater on Vimeo.
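The detection step described above (sensor readings, then "light switched on" events, then the time between them) can be sketched in Python. This is a minimal illustration, not the Flash/AS3 code from our demo. It assumes raw readings on the 0 to 1023 scale that Arduino's `analogRead` returns. The threshold values `ON_THRESHOLD` and `OFF_THRESHOLD` and the function names are hypothetical and would need calibrating against a real crossing light.

```python
from typing import Iterable, List, Tuple

# Hypothetical calibration values on analogRead's 0-1023 scale. Using two
# thresholds (hysteresis) means a reading that flickers around a single
# cut-off is not counted as several button presses.
ON_THRESHOLD = 600
OFF_THRESHOLD = 400


def detect_light_on_events(samples: Iterable[Tuple[float, int]]) -> List[float]:
    """Return the times (in seconds) at which the crossing light switches on.

    `samples` is a sequence of (time_in_seconds, sensor_reading) pairs in
    time order, e.g. as polled from the board.
    """
    events: List[float] = []
    light_on = False
    for t, reading in samples:
        if not light_on and reading >= ON_THRESHOLD:
            light_on = True   # off -> on transition: treat as a button press
            events.append(t)
        elif light_on and reading <= OFF_THRESHOLD:
            light_on = False  # the light has gone out again
    return events


def gaps_between(events: List[float]) -> List[float]:
    """Time elapsed between each light-on event and the previous one."""
    return [later - earlier for earlier, later in zip(events, events[1:])]


if __name__ == "__main__":
    readings = [(0.0, 120), (0.5, 650), (1.0, 580), (1.5, 300), (40.0, 700)]
    events = detect_light_on_events(readings)
    print(events)                # [0.5, 40.0]
    print(gaps_between(events))  # [39.5]
```

The reading of 580 at one second sits between the two thresholds, so the light is still treated as on; only dropping to 300 counts as it going out.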

The plan is for the projection to show only the words, projected either on the nearby wall or on the floor in front of the pedestrians. As well as making people feel more positive, the system is designed to stop pedestrians crossing the road at the wrong time, before the traffic has actually been stopped. People often press a pedestrian crossing button but then cross before the traffic has stopped for them, leaving drivers waiting for no reason. By distracting people with the projection so that they do not cross without a signal, the system should help make the traffic lights and pedestrian crossing more effective and efficient.

To make this working demo we used an open-source Arduino-to-Flash library called Glue. From this (or from the Arduino software) we were able to upload the StandardFirmata Arduino sketch onto the board, which allowed us to communicate with the Arduino directly from Flash and made it easier to connect the sensor data to the projected image. The projection itself was made in Flash using additional AS3 script, plus a text file that was loaded and parsed by the Flash file to provide the list of words. To run the projection we also needed the proxy server that comes with the Glue software to be running. This acted as a go-between for the Arduino and the Flash application on the same computer, allowing the data to be transferred and the projection to be controlled.
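The word list came from a text file parsed by the Flash file. The file's exact format is not recorded here, so the Python sketch below simply assumes one word per line, optionally written with a leading "-" as in the list on this blog. `parse_word_list` and `load_word_list` are hypothetical names for illustration and are not part of Glue.

```python
from pathlib import Path
from typing import List


def parse_word_list(text: str) -> List[str]:
    """Turn the contents of a word file into a clean list of upper-case words.

    Assumes one word per line; blank lines are skipped and an optional
    leading '-' bullet is stripped.
    """
    words: List[str] = []
    for line in text.splitlines():
        entry = line.strip().lstrip("-").strip()
        if entry:
            words.append(entry.upper())
    return words


def load_word_list(path: str) -> List[str]:
    """Read a (hypothetical) words.txt file and parse it."""
    return parse_word_list(Path(path).read_text(encoding="utf-8"))


if __name__ == "__main__":
    sample = "- STONEHOUSE\n\n- HAPPY\nnice\n"
    print(parse_word_list(sample))  # ['STONEHOUSE', 'HAPPY', 'NICE']
```

Keeping the words in a separate file means the list can be changed without rebuilding the projection itself.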

Stonehouse - Projection Content

The final element to consider in finalising our project is the content shown by the projection and its relevance to the project. Throughout the project we have looked at light and then developed this into the concept of communication through light, so we felt this was the right route to follow. There are many things that can be communicated, and many ways of doing so. The projection covers the concept of light as a means of communication, but the content the light shows can take different forms, such as text, image, shape, line, colour or number. The data behind that content can also vary, for example facts, values, thoughts, opinions or words.

In particular, we were interested in commenting on current opinions of Stonehouse. Most people seem to feel that Stonehouse is not a very nice location: it is dark, dank and run down, as well as being an area for crime. We therefore felt it would be a good idea to present the opposite of these opinions, to try to brighten people's feelings towards the Stonehouse area. We decided to do this by projecting words that are the opposite of those people might use to describe Stonehouse; viewers would see and read them, and this may give them a more positive outlook on the environment. The number of words shown will be based on the length of time between a press of the pedestrian button and when the pedestrian light was last lit. The following are the positive words that we will use for our project:

- STONEHOUSE

- HAPPY

- NICE

- BEAUTIFUL

- CREATIVE

- IMAGINATION

- INSPIRATION

- COLOURFUL

- BRIGHT

- LIGHT

- GREEN

- NEW

- CONFIDENT

- STRONG

- REGENERATION

- QUALITY

- CALM

- GREAT

- POWERFUL

- DESIRABLE

- LUXURY

- ATTRACTIVE

- LIFE
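The rule that the number of words depends on the time since the pedestrian light was last lit could work along the lines of the Python sketch below. It is a hedged illustration rather than our implementation: the scaling of one extra word per 30 seconds (`SECONDS_PER_WORD`), the minimum of one word, and picking the words at random are all assumptions, and the function names are hypothetical.

```python
import random
from typing import List, Optional, Sequence

# The positive words listed above.
WORDS = (
    "STONEHOUSE", "HAPPY", "NICE", "BEAUTIFUL", "CREATIVE", "IMAGINATION",
    "INSPIRATION", "COLOURFUL", "BRIGHT", "LIGHT", "GREEN", "NEW",
    "CONFIDENT", "STRONG", "REGENERATION", "QUALITY", "CALM", "GREAT",
    "POWERFUL", "DESIRABLE", "LUXURY", "ATTRACTIVE", "LIFE",
)

# Hypothetical scaling: one extra word for every 30 seconds since the
# pedestrian light was last lit.
SECONDS_PER_WORD = 30.0


def words_to_show(gap_seconds: float, max_words: int) -> int:
    """Number of words to project for a given gap between light-on events.

    Shows at least one word and never more than are available.
    """
    if gap_seconds < 0:
        raise ValueError("gap_seconds must not be negative")
    return min(max_words, 1 + int(gap_seconds // SECONDS_PER_WORD))


def select_words(gap_seconds: float,
                 words: Sequence[str] = WORDS,
                 rng: Optional[random.Random] = None) -> List[str]:
    """Pick distinct words at random; longer gaps give more words."""
    if rng is None:
        rng = random.Random()
    count = words_to_show(gap_seconds, len(words))
    return rng.sample(list(words), count)


if __name__ == "__main__":
    print(words_to_show(39.5, len(WORDS)))  # 2
    print(select_words(39.5, rng=random.Random(1)))
```

Passing a seeded `random.Random` makes the selection repeatable for testing, while the default gives a different mix of words each time the light comes on.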

Monday, 12 April 2010

Stonehouse - Our project outline

Once we had decided on the various elements of our project, we had to outline how it would work and then build it. The following image shows how our system would be placed in our site area:

There is also the option of placing the projection on the floor in front of pedestrians at the crossing, as this would be more obvious to them and might catch their attention more easily, although they may find it easier to carry on crossing at the wrong time because they would not have to move from their position at all. Obviously, this setup is quite difficult to implement successfully in our current situation and would take a lot more work to integrate permanently into the setting. However, the general idea can be built as a mock-up to show the basic physical layout of the project, including the working functions of the Arduino.
